It’s been quite a while since I last posted on here, and interestingly about the same topic: code coverage analysis in Firefox extensions. And since then Firefox has gotten a completely new extension system. I’ve been really busy porting my extensions and not writing blog posts.

The add-on SDK conveniently came with a test runner built into the JPM command line tool. WebExtensions don’t, which forces the choice of test runner on the developer.

If you use a traditional test runner, you’re probably not really struggling with code coverage. You may have had to do a little bit of research to properly mock the environment of the test runner, so your extension code can run in it, most likely ending up with something that exports the DOM globals from JSDOM and some stub of the WebExtensions API (my choice is sinon-chrome). And then you’re done.

```javascript
const browserEnv = require("browser-env");
const browser = require("sinon-chrome/webextensions");

browserEnv();
global.browser = browser;
```

Trouble is, I like the fancy modern test runners, which run tests in parallel. Of course we could use the global injection approach there. However, we would sacrifice running tests in parallel inside a single test file, though we may still be able to run multiple test files at once, depending on the test runner (my choice is ava).

So how can we run them in parallel then? The extension files all need a `window` global and a `browser`/`chrome` global. Sure, but luckily JSDOM by default doesn’t export the window global to the node global; instead it creates a sandbox. Into that sandbox we also inject the WebExtension API stubs and whatever DOM APIs we need that JSDOM doesn’t come with. Finally we execute the file to test in that sandbox (using `eval`) and pass in the window as context to the test.

```javascript
const fs = require("mz/fs");
const path = require("path");
const { JSDOM, VirtualConsole } = require("jsdom");

// The excerpt doesn't show how virtualConsole is set up; a plain instance is
// assumed here.
const virtualConsole = new VirtualConsole();

/**
 * Creates sandbox with DOM and WebExtensions APIs and loads instrumented files in it.
 *
 * @param {string[]} files - Paths of files to load in the DOM sandbox.
 * @param {string} [html="<html>"] - Document to create the DOM sandbox around.
 * @returns {object} JSDOM global the files are loaded in.
 */
const getEnv = async (files, html = "<html>") => {
    const dom = new JSDOM(html, {
        runScripts: 'outside-only',
        virtualConsole
    });
    dom.window.browser = require("sinon-chrome/webextensions");
    // Purge that instance of the browser stubs, so tests have their own env.
    delete require.cache[path.join(__dirname, '../node_modules/sinon-chrome/webextensions/index.js')];
    for(const file of files) {
        dom.window.eval(await fs.readFile(path.join(__dirname, file), 'utf8'));
    }
    return dom;
};
```

Sounds simple enough, and results in us getting a sandbox per test. A lot of overhead, just to run tests in parallel. We haven’t even started with overhead though.
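The cache purge in `getEnv` is what gives every sandbox its own stub instance. As a rough, standalone illustration of that mechanism (not the code from my test suites), the sketch below writes a tiny stateful module to a temp directory as a stand-in for sinon-chrome and shows that evicting it from `require.cache` makes the next `require` return a new object. The `freshRequire` helper and the stub file are made up for this example.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Hypothetical stand-in for a stateful stub module such as sinon-chrome.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), "stub-demo-"));
const stubPath = path.join(dir, "stub.js");
fs.writeFileSync(stubPath, "module.exports = { calls: [] };");

/**
 * Loads a module and immediately evicts it from the require cache, so the
 * next call evaluates the module again and returns a new instance.
 *
 * @param {string} id - Module identifier or absolute path.
 * @returns {*} The exports of a freshly evaluated module.
 */
const freshRequire = (id) => {
    const instance = require(id);
    // require.resolve returns the absolute filename used as the cache key.
    delete require.cache[require.resolve(id)];
    return instance;
};

const first = freshRequire(stubPath);
const second = freshRequire(stubPath);
first.calls.push("tabs.query");

console.log(first === second); // false
console.log(second.calls.length); // 0
```

Using `require.resolve` instead of a hand-built path avoids depending on where `node_modules` lives. Note that only the entry module is evicted here; anything it requires internally stays cached, so state held in those inner modules would still be shared.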

Like in the article for the add-on SDK, to get coverage, files have to be instrumented and coverage has to be read from them. This setup isn’t doing that yet. Instead it currently loads the tested files using node’s `fs` module (actually, using a promisified version) and then evaluates them inside the sandbox. At no point could my coverage tool of choice, nyc, instrument them or read coverage.

To sum up, we have to manually instrument the files when loading them using nyc and then manually export the coverage results so nyc can generate a report. Now, the instrumenting bit is actually an API nyc offers. The trouble is the bit where we read the coverage.

Let’s start with the simple task, instrumenting. The following code snippet replaces the `readFile` call in the `getEnv` function from before. It only instruments files using nyc when nyc is being used to run the tests, else it just reads the file.

```javascript
const fs = require("mz/fs");
const cp = require("child_process");
const util = require("util");

const ef = util.promisify(cp.execFile);
// Caches the (potentially instrumented) source per file path.
const instrumentCache = new Map();

/**
 * Returns the source of the specified file, instrumented when running nyc.
 *
 * @param {string} sourcePath - Path to the file that should be loaded.
 * @returns {string} Potentially instrumented source code of the given file.
 */
const instrument = async (sourcePath) => {
    if(!instrumentCache.has(sourcePath)) {
        if(!process.env.NYC_CONFIG) {
            const source = await fs.readFile(sourcePath, 'utf8');
            instrumentCache.set(sourcePath, source);
        }
        else {
            const instrumented = await ef(process.execPath, [
                './node_modules/.bin/nyc',
                'instrument',
                sourcePath
            ], {
                cwd: process.cwd(),
                env: process.env
            });
            instrumentCache.set(sourcePath, instrumented.stdout.toString('utf-8'));
        }
    }
    return instrumentCache.get(sourcePath);
};
```

Sadly we can’t just share the coverage global with the JSDOM scopes. Instead we have to manually collect the coverage and hand it over to nyc.
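To make the problem a bit more tangible, here is a small sketch that uses Node’s built-in `vm` module in place of JSDOM (a deliberate simplification so the example has no dependencies). The `instrumentedSource` string is a made-up stand-in for instrumented code, which records its counters on a `__coverage__` object on whatever global it runs in.

```javascript
const vm = require("vm");

// Hypothetical, heavily simplified stand-in for instrumented source: it keeps
// its counters on a __coverage__ object on its own global.
const instrumentedSource = `
    globalThis.__coverage__ = globalThis.__coverage__ || {};
    globalThis.__coverage__["example.js"] = { hits: 1 };
`;

// Each context has its own global object, much like each JSDOM window.
const sandbox = vm.createContext({});
vm.runInContext(instrumentedSource, sandbox);

// The coverage never reaches Node's global, where nyc would look for it...
console.log("example.js" in (global.__coverage__ || {})); // false
// ...but it can be read off the sandbox's global by hand.
console.log(sandbox.__coverage__["example.js"].hits); // 1
```

In the JSDOM setup the equivalent of `sandbox.__coverage__` is `window.__coverage__`, which is the object that has to be handed over to nyc.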

Luckily nyc reads all “.json” files in the “.nyc_output” directory as coverage results. Thus we can just write the coverage results to a uniquely named JSON file in that directory once tests are done (oh, and we close the window to clean up all garbage):

```javascript
const fs = require("mz/fs");
const path = require("path");
const mkdirp = require("mkdirp");
const util = require("util");

const mk = util.promisify(mkdirp);
// Counter that keeps file names unique within this process.
let id = 0;

/**
 * Saves coverage to disk for nyc to collect and cleans up the JSDOM sandbox.
 *
 * @param {JSDOMWindow} window - Window global of the sandbox. Window property
 *                               on the global getEnv returns.
 * @returns {undefined}
 */
const cleanUp = async (window) => {
    if(process.env.NYC_CONFIG) {
        const nycConfig = JSON.parse(process.env.NYC_CONFIG);
        await mk(nycConfig.tempDirectory);
        await fs.writeFile(path.join(nycConfig.tempDirectory, `${Date.now()}_${process.pid}_${++id}.json`), JSON.stringify(window.__coverage__), 'utf8');
    }
    window.close();
};
```

Update (2018-11-10): in nyc 13.1.0 `tempDirectory` was changed to `tempDir`.
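Given that rename, one way to keep the coverage export working across nyc versions is to accept either key. The following is only a sketch under that assumption, using nothing but Node’s standard library; `getTempDir` and `writeCoverage` are hypothetical helper names, not part of nyc.

```javascript
const fs = require("fs");
const path = require("path");

// Counter that keeps file names unique within this process.
let counter = 0;

/**
 * Resolves the nyc temp directory from a parsed NYC_CONFIG, accepting both
 * the newer tempDir key and the older tempDirectory key.
 *
 * @param {object} nycConfig - Parsed contents of process.env.NYC_CONFIG.
 * @returns {string} Directory nyc collects coverage files from.
 */
const getTempDir = (nycConfig) => nycConfig.tempDir || nycConfig.tempDirectory;

/**
 * Writes a coverage object to a uniquely named JSON file in the temp directory.
 *
 * @param {object} nycConfig - Parsed contents of process.env.NYC_CONFIG.
 * @param {object} coverage - Coverage object read from a sandbox.
 * @returns {string} Path of the written file.
 */
const writeCoverage = async (nycConfig, coverage) => {
    const dir = getTempDir(nycConfig);
    // recursive: true creates missing parents and doesn't fail if dir exists.
    await fs.promises.mkdir(dir, { recursive: true });
    const file = path.join(dir, `${Date.now()}_${process.pid}_${++counter}.json`);
    await fs.promises.writeFile(file, JSON.stringify(coverage), "utf8");
    return file;
};
```

Inside `cleanUp` this would be called as `await writeCoverage(JSON.parse(process.env.NYC_CONFIG), window.__coverage__)` before closing the window.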

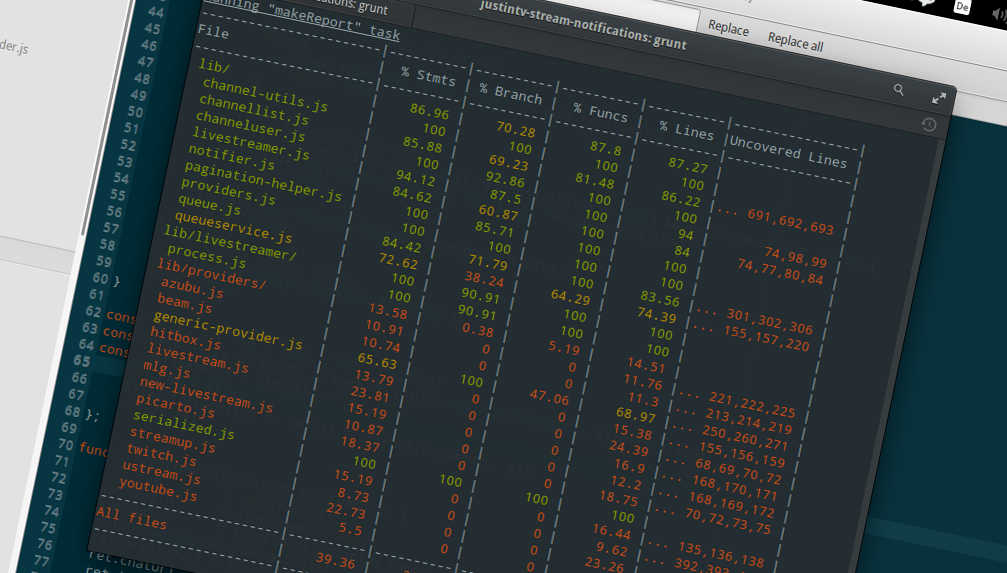

The code in this article is excerpted from the test suites I use for two of my extensions. Advanced GitHub Notifier has the fancy one we built throughout this article, which essentially creates a sandbox per test, and Live Stream Notifier uses a global scope-polluting setup. Both setups have their drawbacks. Polluting the global environment means that tests depending on the state of the API stubs or similar have to run in series. However, it is much faster than initializing a whole DOM sandbox for every test, and the sandbox approach requires a lot more setup.